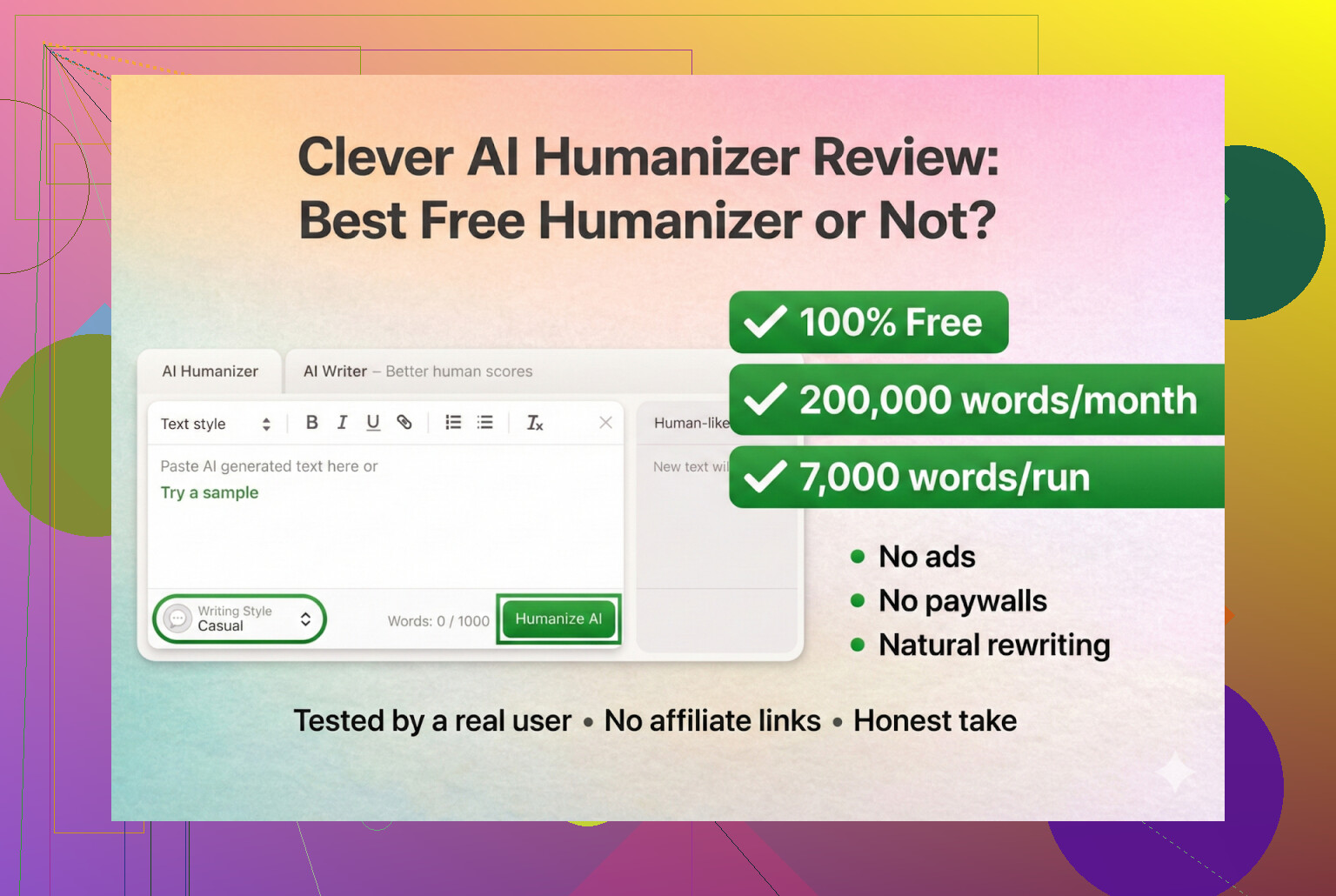

I’ve been testing the Clever AI Humanizer tool on different types of AI-written content, and I’m getting mixed results. Sometimes it passes detectors and sounds natural, other times it gets flagged or feels awkward and over-edited. I’d love to hear real user experiences, including what settings, prompts, or workflows actually make it sound genuinely human while staying safe for SEO, freelance work, or academic use.

Clever AI Humanizer: Actual User Experience, Not Marketing Stuff

I’ve been poking around free “AI humanizer” tools for a while, mostly out of curiosity and partly out of self-preservation. A lot of them either butcher the text, start charging out of nowhere, or trigger more detectors than the original AI draft.

Clever AI Humanizer is one of the few I’ve kept bookmarked, so I decided to do a more structured test and write up what actually happens when you use it.

Site I’m talking about:

Clever AI Humanizer → https://aihumanizer.net/

Their built-in writer: AI Writer - 100% Free AI Text Generator with AI Humanization!

Yes, that URL is correct. There are fake ones floating around.

Real Site vs Random Clones

This part is important, especially if you found the tool through ads.

I’ve had people DM me asking for the “real” Clever AI Humanizer link after accidentally landing on some copycat sites using similar names in Google Ads, then complaining they got hit with surprise subscriptions and “pro plans.”

In my experience:

- Clever AI Humanizer at https://aihumanizer.net/

- No paid tiers so far

- No sneaky subscription wall

- No “upgrade to unlock basic features” stuff

If you’re seeing pricing pages, monthly plans, or “Clever Humanizer Pro” that demands a card, you’re probably not on the original site.

How I Tested It

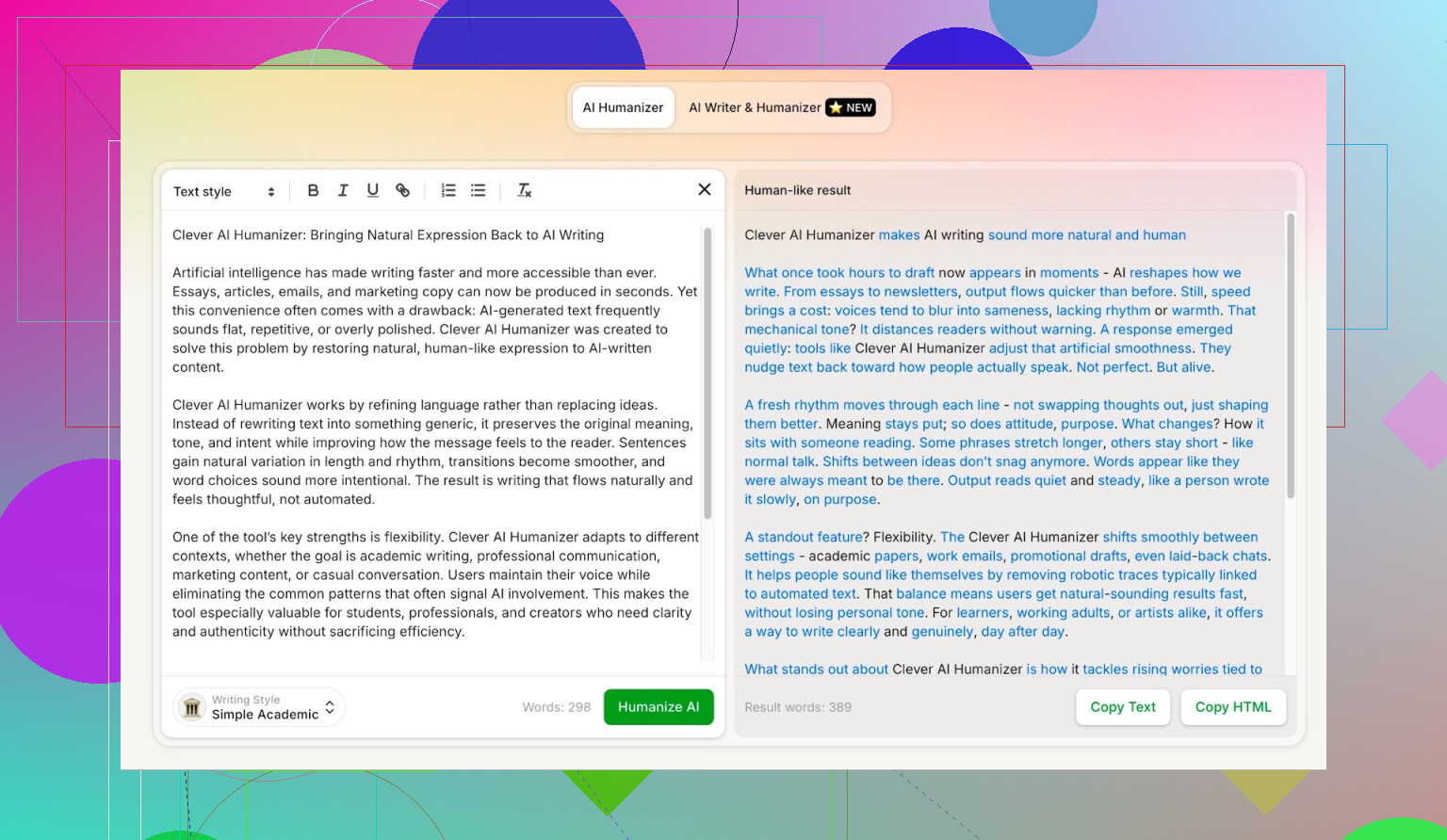

For this run, I went full AI vs AI.

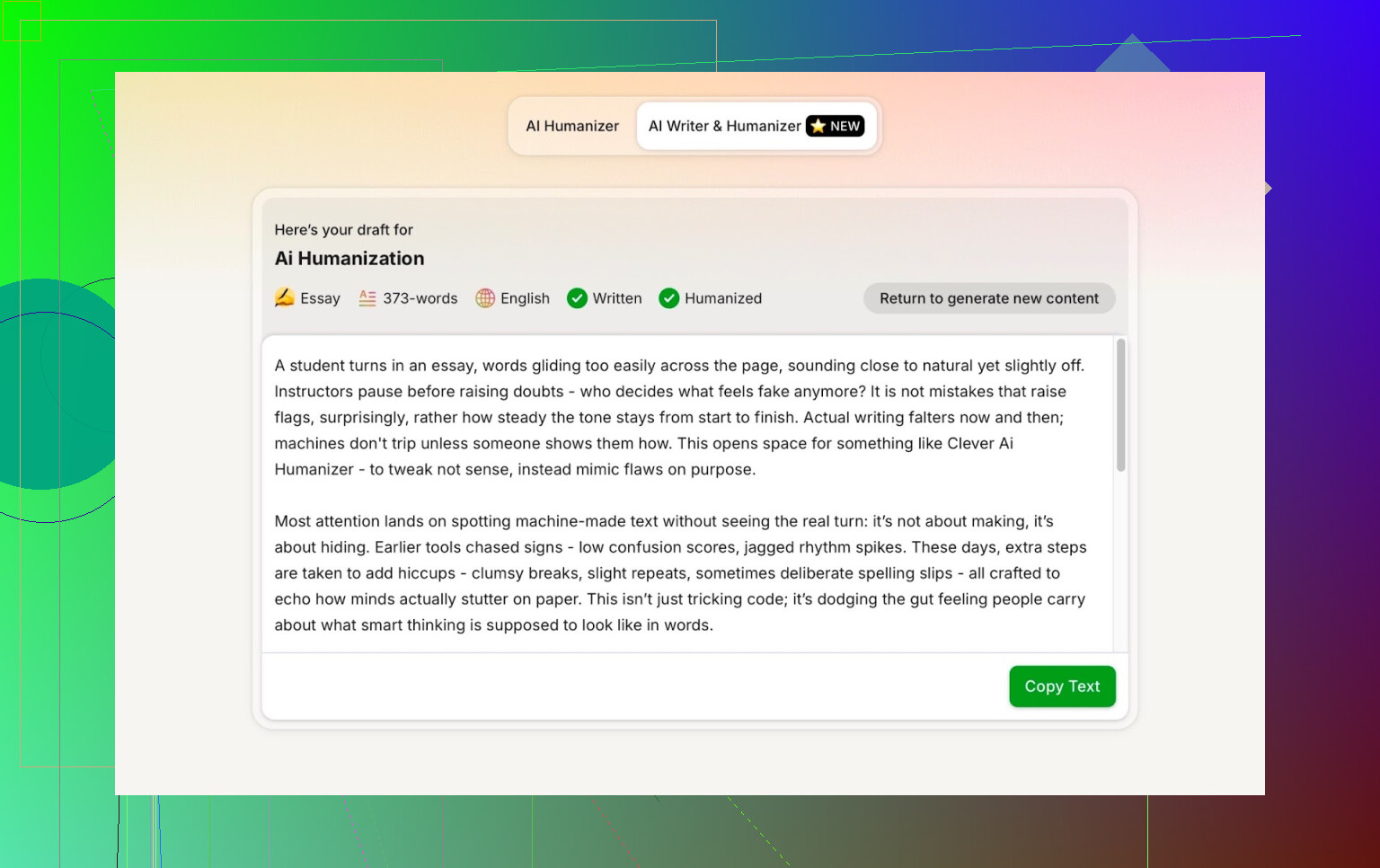

- I used ChatGPT 5.2 to generate a completely AI-written article about Clever AI Humanizer.

- Then I ran that text through Clever AI Humanizer in Simple Academic mode.

- After that, I checked:

  - AI detector scores

  - Readability

  - Grammar and style

  - How much the meaning shifted

Simple Academic is a weird but interesting style. It’s not full-on scholarly jargon, but it still sounds like something a student might turn in for a class that isn’t too formal. That “in-between” style actually tends to work pretty well for slipping past detectors.

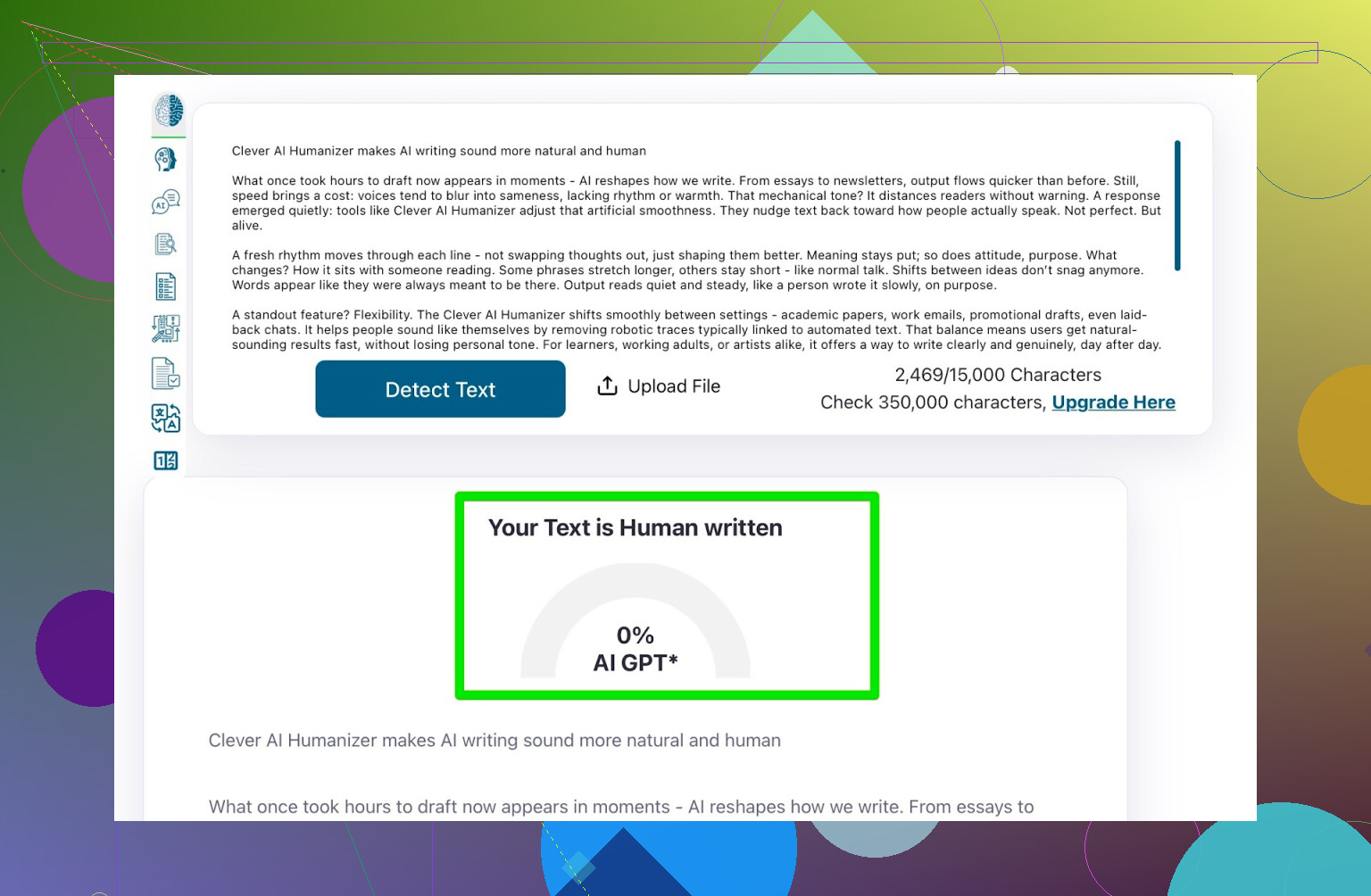

Detector Check: ZeroGPT & GPTZero

First test: run the humanized text through the usual suspects.

ZeroGPT

If you’ve used detectors at all, you’ve probably seen ZeroGPT near the top of Google.

Personally, I don’t trust it as some kind of final authority. I’ve seen it label the U.S. Constitution as “100% AI,” which should tell you everything you need to know about how noisy these tools can be.

That said, a lot of teachers, clients, and companies still use it, so it matters.

- Result for Clever AI Humanizer output: 0% AI

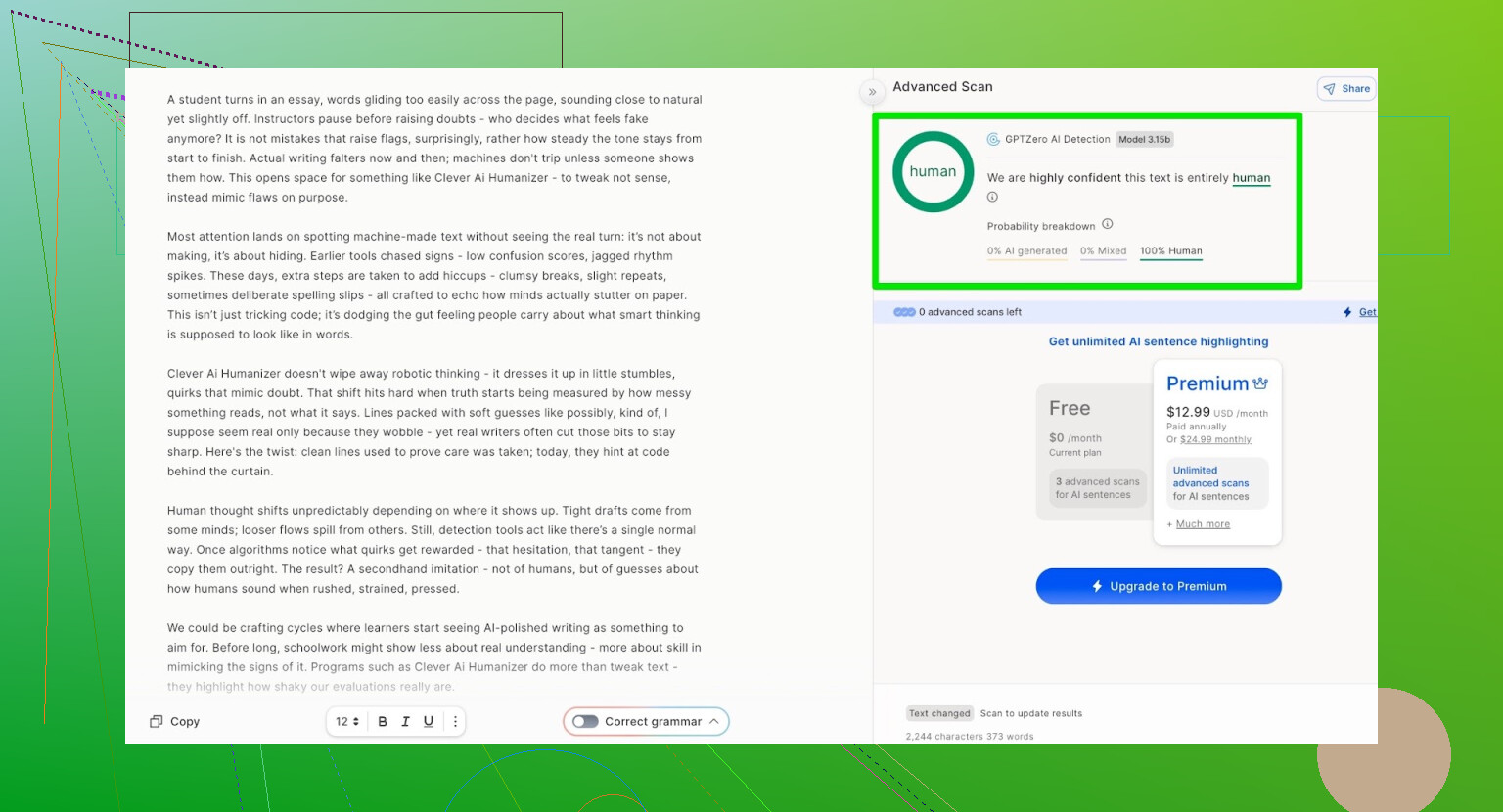

GPTZero

Then I tried GPTZero, which is the other big name people love to throw around in policy documents and university emails.

- Result: 100% human, 0% AI

So if your only goal is “make the detectors chill,” Clever AI Humanizer absolutely does the job on these two.

But that’s not the full picture.

Does The Text Actually Read Like A Person Wrote It?

Passing detectors is nice, but if the text comes out sounding like a robot trying to cosplay a grad student, it’s still useless.

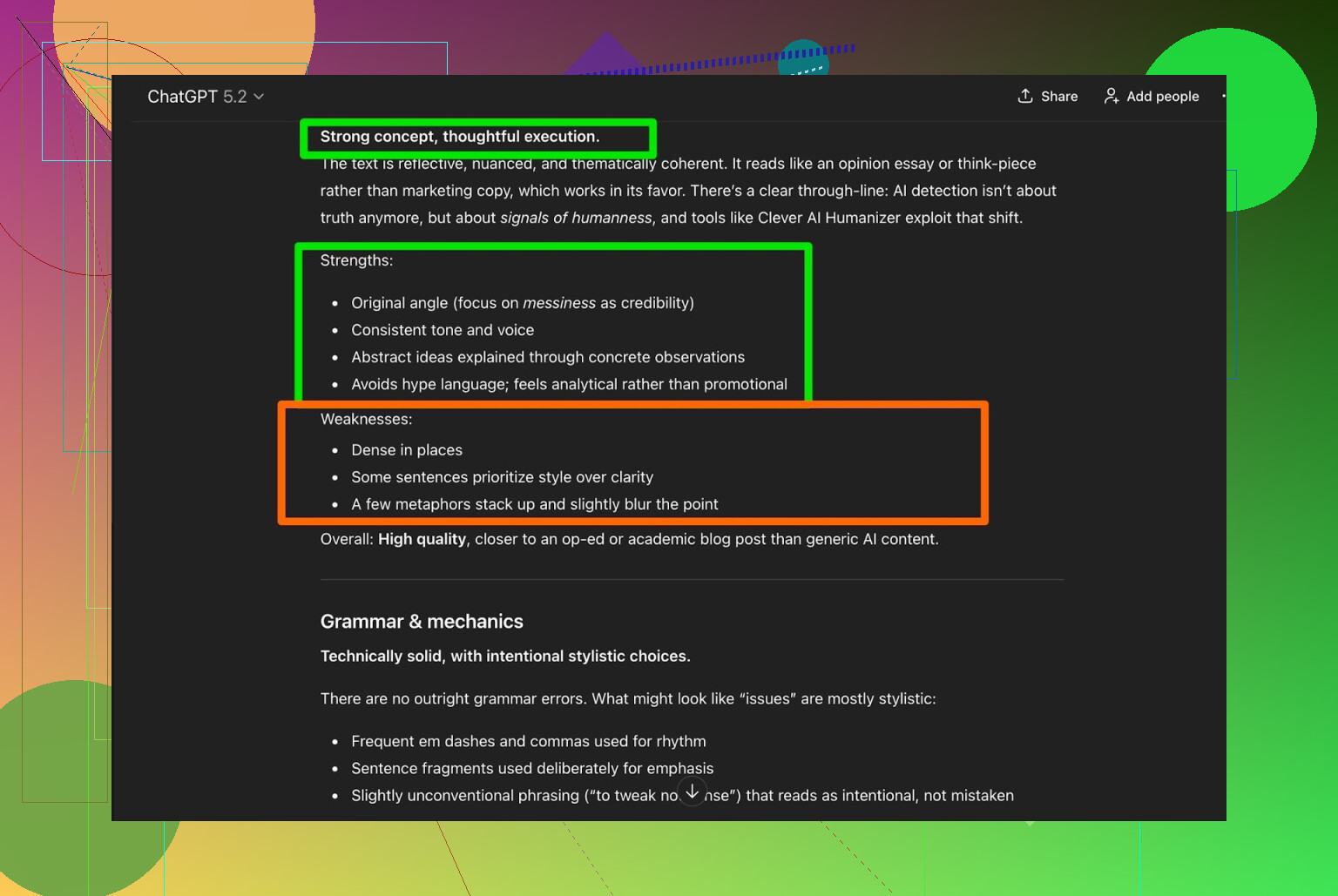

So I fed the output back into ChatGPT 5.2 and asked it to do a quality check.

- Grammar: solid

- Coherence: everything held together

- Style: appropriate for “simple academic,” but…

ChatGPT still recommended a human revision pass, which honestly is the only sensible answer. Any tool that claims “no editing needed” is just trying to sell you on a fantasy.

My take:

- As a human starting point, it’s totally workable.

- If you care about tone, nuance, and matching your own voice, you’re going to want to tweak it.

- But you’re not stuck re-writing from scratch.

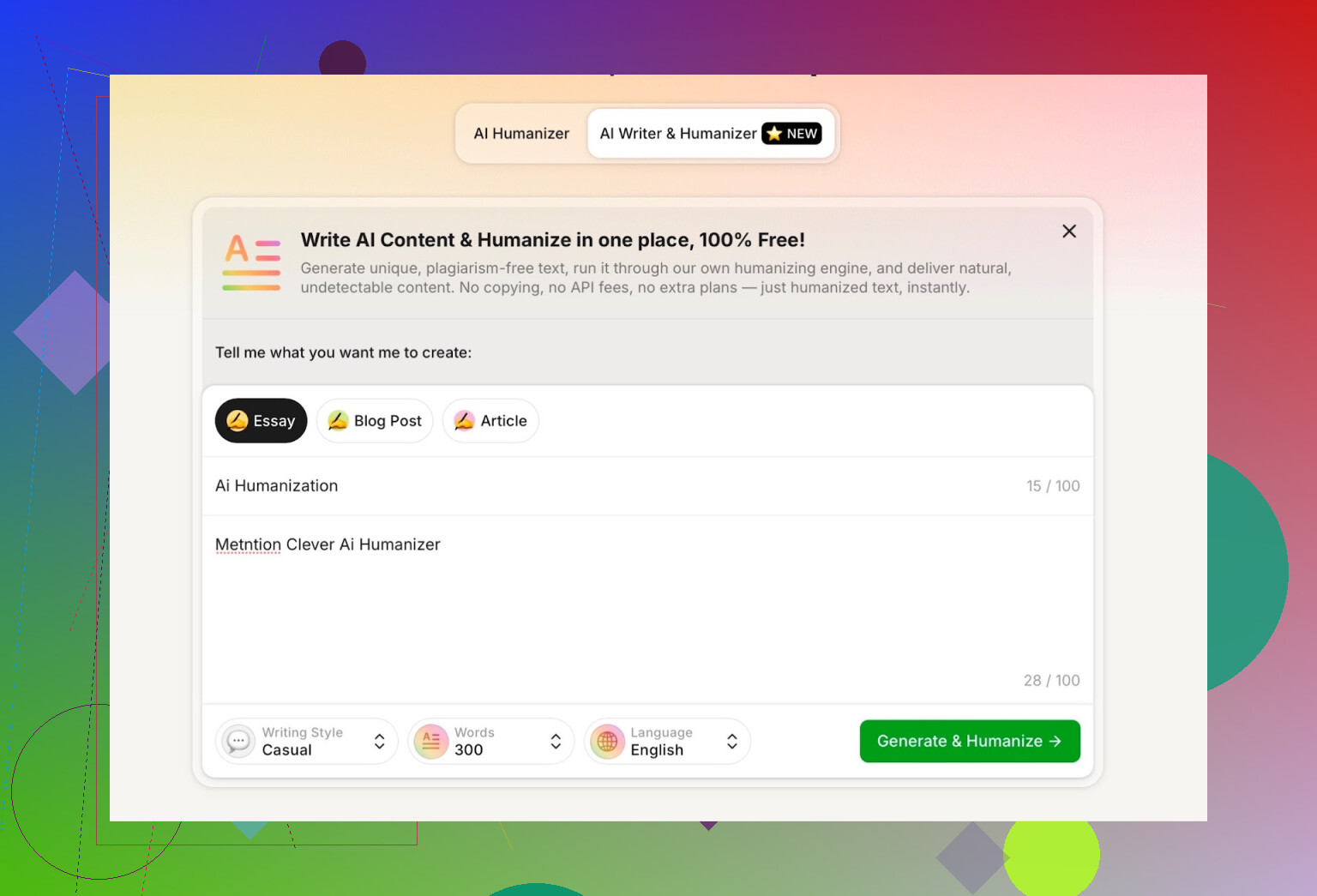

Their Built-In AI Writer: Worth Using?

They quietly added a feature called AI Writer.

This one is interesting because:

- You don’t have to write in another AI tool first.

- It writes and humanizes in one go.

- You can pick style and content type.

For the test, I chose:

- Style: Casual

- Topic: AI humanization

- Requirement: mention Clever AI Humanizer

- Also: I deliberately introduced an error in the prompt to see if it handled it weirdly.

First Annoyance: Word Count

I asked for 300 words.

It did not give me 300 words.

Not “roughly near 300” but noticeably off. So if you need strict limits (like homework caps, platform word limits, or client specs), you’ll need to manually trim or pad things.

That’s my first real complaint: when I put in an exact number, I expect the tool to at least try to honor it.
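If you need to hold a hard limit, a quick word-count check saves you from eyeballing it. This is just a minimal sketch: the 5% tolerance and the function names are my own assumptions, and the `draft` string is a stand-in, not real tool output.

```python
def word_count(text: str) -> int:
    """Count whitespace-separated words."""
    return len(text.split())


def within_limit(text: str, target: int, tolerance: float = 0.05) -> bool:
    """Return True if the word count is within +/- tolerance of target."""
    allowed = target * tolerance
    return abs(word_count(text) - target) <= allowed


# Stand-in for pasted tool output (assumption: 340 words for a 300-word request).
draft = "word " * 340
print(word_count(draft), within_limit(draft, target=300))
```

Paste the generated text in place of `draft` and adjust `tolerance` to whatever your platform or client actually allows.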

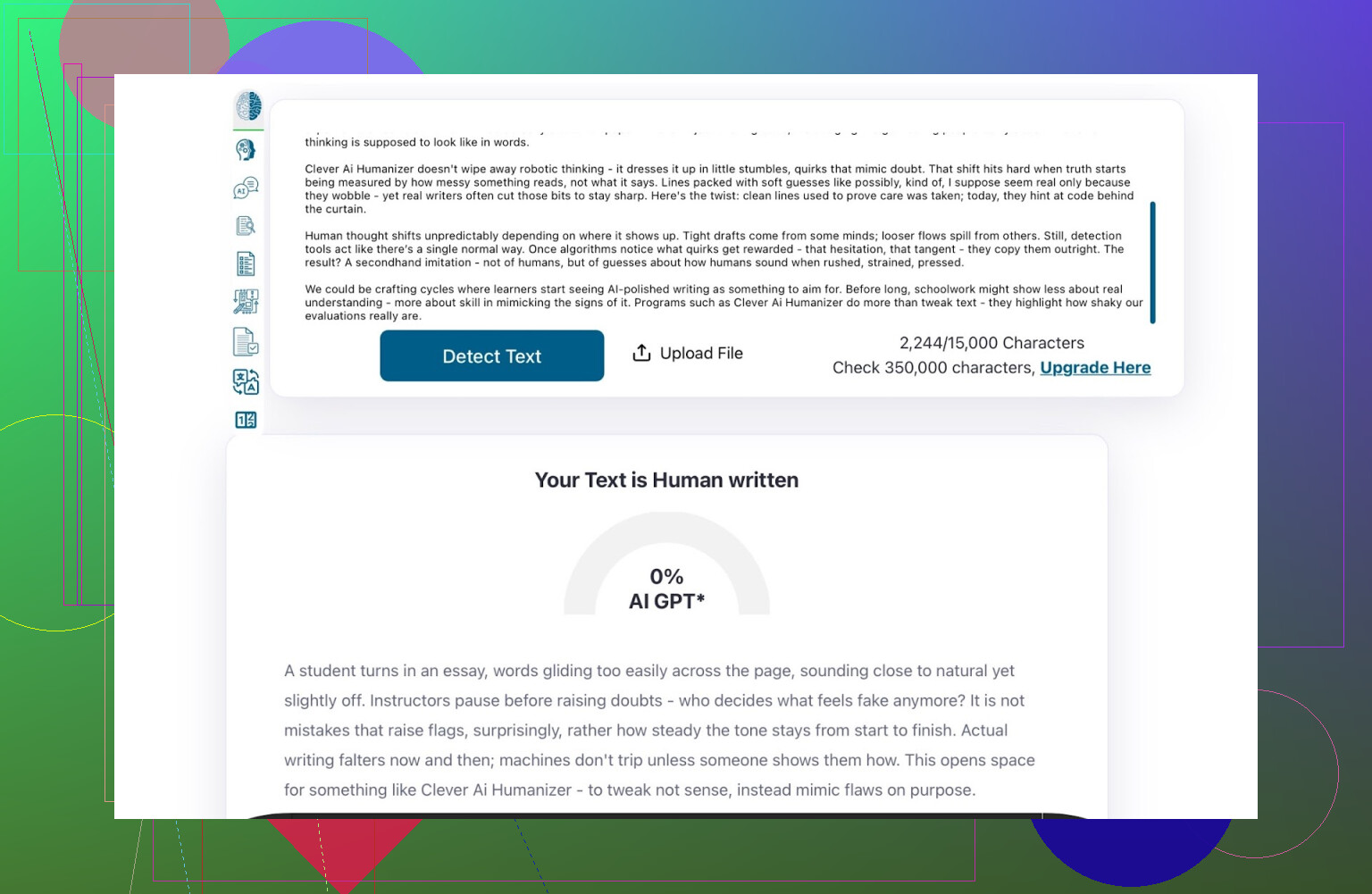

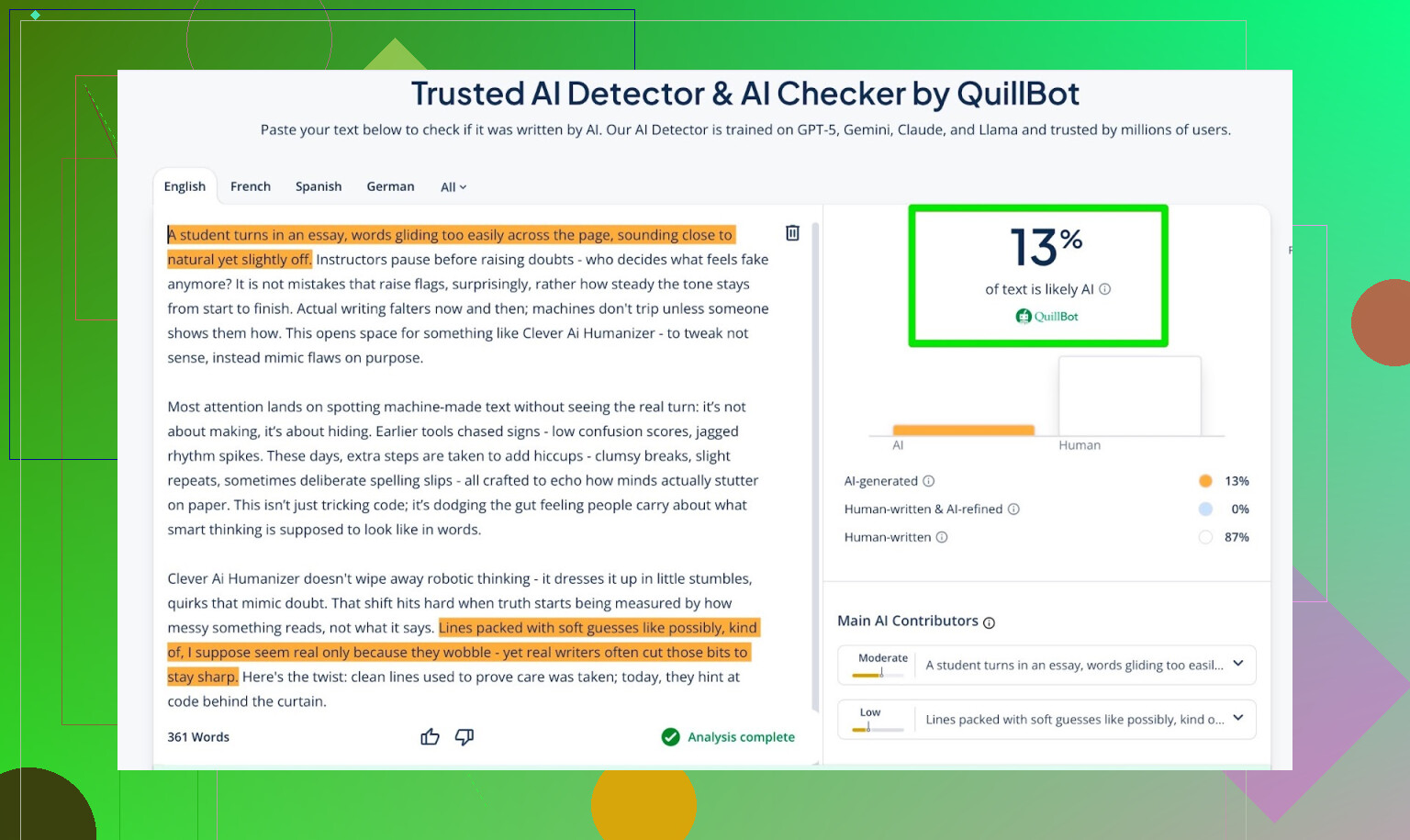

AI Detection Scores For The AI Writer Output

Then I did the same thing with the text that came directly from AI Writer.

- GPTZero: 0% AI

- ZeroGPT: 0% AI, labeled as 100% human

- QuillBot detector: 13% AI

None of these numbers freaked me out. Detectors are probabilistic, not oracles. If something hits 0% on two tools and 13% on a third, that’s still very decent.
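To put those three numbers side by side, here is a small sketch that averages them and shows how far apart the detectors are. The scores are the ones reported above; the variable names are just for illustration.

```python
# Scores reported above for the AI Writer output (percent flagged as AI).
scores = {"GPTZero": 0, "ZeroGPT": 0, "QuillBot": 13}

values = list(scores.values())
average = sum(values) / len(values)
spread = max(values) - min(values)

print(f"average: {average:.1f}%  spread: {spread} points")
```

The average comes out to roughly 4.3%, with a 13-point gap between the lowest and highest detector, which is a reasonable reminder that a single score is not the whole story.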

Content Quality According To ChatGPT 5.2

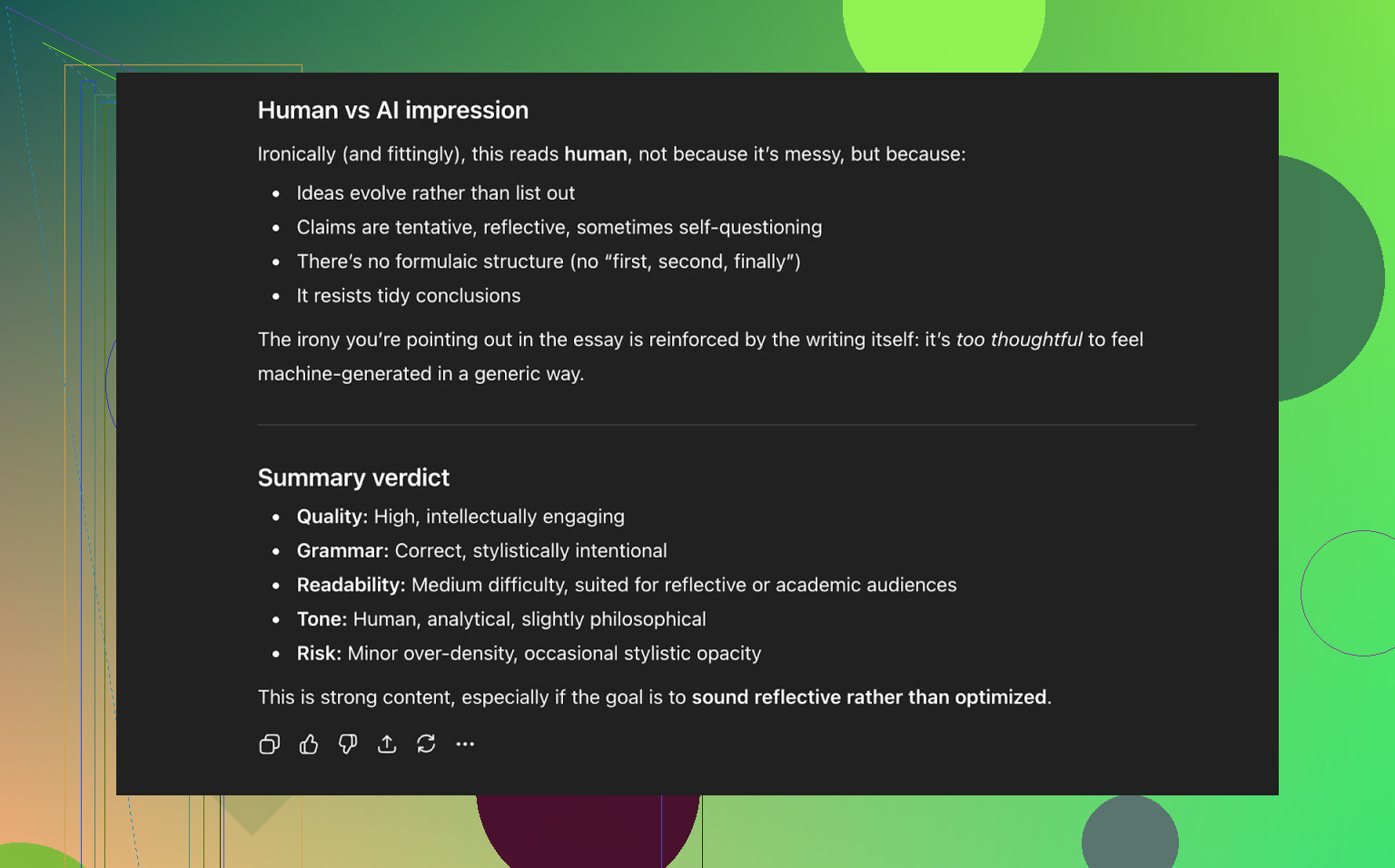

I tossed the AI Writer output back into ChatGPT 5.2 as well, just to see what it “thought” of the writing.

Summary:

- It read like a human wrote it.

- It was logically structured.

- Nothing obviously broken.

- Again, a light human edit would make it stronger, but it was usable as-is.

So at that point, you’ve got:

- 3 detectors accepting it as mostly or completely human

- 1 big LLM classifying it as human-written

- Readable, coherent output

For a free tool, that’s pretty impressive.

How It Stacks Up Against Other Humanizers

In my own testing, I ran a bunch of humanizers on similar content and compared the average “AI-ness” scores from different detectors.

Here’s how Clever AI Humanizer compared:

| Tool | Free | Average AI detector score |
| --- | --- | --- |
| ⭐ Clever AI Humanizer | Yes | 6% |
| Grammarly AI Humanizer | Yes | 88% |
| UnAIMyText | Yes | 84% |
| Ahrefs AI Humanizer | Yes | 90% |
| Humanizer AI Pro | Limited | 79% |
| Walter Writes AI | No | 18% |
| StealthGPT | No | 14% |
| Undetectable AI | No | 11% |
| WriteHuman AI | No | 16% |
| BypassGPT | Limited | 22% |

Interpret that however you like, but my general impression:

- Clever AI Humanizer beat every free tool I tried in terms of detection scores.

- It also held its own or outperformed several paid options.

Again, this is all within the limits of noisy detectors, but the pattern is clear enough.

Where It Falls Short

It’s not magical. You will notice flaws if you look closely.

Some real drawbacks I ran into:

- Word count control is loose

  - If you absolutely need strict limits, you still have to adjust manually.

- Some subtle patterns still feel “AI-ish”

  - Even if detectors say “100% human,” you may notice:

    - Repetitive sentence structure

    - Overly smooth transitions

    - A slightly generic tone

    It’s hard to describe, but if you’ve read a lot of AI text, you can feel it.

- It doesn’t preserve content 1:1

  - The meaning stays roughly the same, but it will shift phrasing, structure, and sometimes emphasis.

  - That’s probably a big part of why it scores so low on AI detectors, but it does mean you shouldn’t feed it something legally or technically sensitive without a careful read-through.

- Some LLMs still flag tiny parts as “possibly AI”

  - Not enough to matter for most people, but it’s not invincible.

On the plus side:

- Grammar is strong, easily 8–9/10 based on what I’ve run through grammar checkers and other LLMs.

- Flow is readable and natural enough.

- It doesn’t try to cheat by sprinkling in fake typos like “i have to do it” on purpose just to look human.

I’ve seen other tools intentionally break grammar in order to dodge detectors. That might help with the score but it absolutely wrecks the professionalism of the text.

Bigger Picture: Humanization vs Detection

Even when something scores 0% AI in multiple tools, that doesn’t always mean “this is clearly a human.” It just means the detectors don’t see the usual fingerprints.

But if you read a lot of AI-assisted text, you start noticing a kind of soft pattern in the way it flows:

- Overexplains simple things

- Repeats phrases

- Loves symmetrical sentence structure

- Plays it safe in tone

Clever AI Humanizer reduces that pattern more than most tools I’ve tried, especially among free options, but it doesn’t remove it entirely.

That’s just the nature of the current ecosystem:

AI writes.

Another AI tries to humanize it.

Other AIs try to detect it.

It’s basically an arms race that never really ends.
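One of the patterns listed above, symmetrical sentence structure, is easy to eyeball with a few lines of code. This is only a rough heuristic I'm assuming for illustration (variation in sentence length); it is not how any detector actually works, and the function names are made up.

```python
import re
from statistics import mean, pstdev


def sentence_lengths(text: str) -> list[int]:
    """Split on sentence-ending punctuation and count words per sentence."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    return [len(s.split()) for s in sentences]


def length_variation(text: str) -> float:
    """Coefficient of variation of sentence length (0.0 = all the same length)."""
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return pstdev(lengths) / mean(lengths)


uniform = "One two three. Four five six. Seven eight nine."
varied = "Short. This one is quite a bit longer than the first."
print(length_variation(uniform), length_variation(varied))
```

A value near zero means every sentence is about the same length, which is the "too even" feel described above. Treat it as a prompt for where to edit, not as a score to chase.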

So, Is It Worth Using?

If we’re talking free AI humanizers only:

Yes, Clever AI Humanizer is the strongest one I’ve used so far.

What I’d use it for:

- First-pass humanization of AI drafts

- Lowering detection risk before manual editing

- Generating reasonably natural text with the AI Writer, then tightening it up yourself

What I would not use it for without heavy review:

- Legal documents

- Medical info

- Anything highly technical where every word matters

- Stuff where exact word count is contractually important

You still need a human brain in the loop. But this tool does a lot of the grunt work.

You don’t pay a cent for it right now, which makes the results even more surprising.

Extra References & Reddit Threads

If you want more comparisons and screenshots from other people testing a bunch of tools:

- General best AI humanizer comparison with proofs: Reddit - The heart of the internet

- Specific Clever AI Humanizer review thread: Reddit - The heart of the internet

Short version: your “mixed results” experience is normal, and honestly that’s exactly what I’d expect from any AI humanizer right now, including Clever AI Humanizer.

Here’s what’s going on in practice:

- Detectors are wildly inconsistent

  You can feed the same paragraph to 3 detectors and get:

  - Tool A: 0% AI

  - Tool B: 100% AI

  - Tool C: “unsure / mixed”

  So when Clever AI Humanizer “works” on one test and not another, that’s not always the humanizer’s fault. Sometimes you’re just seeing detector roulette.

- Input quality matters a lot

  If your original AI text is:

  - Very generic

  - Overly polished

  - Full of bullet lists and perfect parallel sentences

  Even a solid humanizer will struggle. It has to twist the content a lot to break those patterns, and that’s when it can start to feel awkward or off.

  Stuff that tends to work better:

  - Conversational drafts

  - Content with some personal angle

  - Shorter chunks instead of giant 2k-word walls

- Style choice changes the outcome

  You mentioned it “sometimes sounds natural, sometimes awkward.”

  In my experience, the more “formal” or “academic” styles in Clever AI Humanizer can drift into that weird uncanny-valley student essay vibe. Looks fine on a detector, but a human can feel something’s off.

  If you need it to sound real:

  - Try the more casual / simple styles

  - Keep paragraphs short

  - Add a quick manual pass to inject your own phrasing

- Detectors are catching “over-humanized” text too

  If you run stuff through multiple tools or humanizers, detectors sometimes start flagging it again because:

  - The structure becomes too uniform

  - Synonyms are swapped in weird places

  - Logical flow feels slightly scrambled

  I’ve had better luck doing:

  - AI draft

  - One pass in Clever AI Humanizer

  - Then a manual cleanup, instead of chaining it through 2–3 tools.

- Where I disagree a bit with @mikeappsreviewer

  They’re pretty positive about Clever AI Humanizer (and they’re not wrong), but I think they underplay one thing:

  If you’re writing for someone who reads a lot of AI text (teachers, editors, some clients), even a “0% AI” Clever output can still feel AI-ish. Not because the tool is bad, but because:

  - The transitions are almost too smooth

  - It often avoids strong opinions or very specific personal details

  That’s where you step in: add tiny personal bits, like “I tried X last week and it completely tanked,” “this part annoyed me,” etc. Detectors don’t care much about that, but humans notice.

- My rule of thumb for using Clever AI Humanizer

  - Good use cases: blog drafts, emails, product descriptions, casual school work, first-pass edits for AI content you admit is AI-assisted.

  - Risky or dumb to use alone: legal docs, medical content, anything where an accusation of “AI plagiarism” could really hurt you, or where exact wording matters.

  I’d treat Clever AI Humanizer as:

  “A strong first-pass rewriter that helps reduce AI fingerprints, not a ‘press button and you’re safe forever’ shield.”

- If you’re getting weird or flagged results

  Try this pattern:

  - Shorten the original AI text first

  - Run smaller chunks through Clever AI Humanizer

  - Reassemble

  - Do a quick manual pass:

    - Change 2–3 phrases per paragraph

    - Add 1 specific detail or opinion per section

  That light edit is usually what takes it from “AI-ish but undetected” to “reads like an actual person.”
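The chunk-then-reassemble part of that pattern can be sketched like this. It only handles the splitting and stitching; the humanizing step is still you pasting each chunk into the web tool, since I'm not assuming any API exists. The 150-word chunk size and the function names are my own placeholders.

```python
def split_into_chunks(text: str, max_words: int = 150) -> list[str]:
    """Group whole paragraphs into chunks of at most max_words words.

    A single paragraph longer than max_words is kept intact as its own chunk.
    """
    chunks: list[str] = []
    current: list[str] = []
    count = 0
    for para in (p.strip() for p in text.split("\n\n")):
        if not para:
            continue
        n = len(para.split())
        # Start a new chunk if adding this paragraph would exceed the limit.
        if current and count + n > max_words:
            chunks.append("\n\n".join(current))
            current, count = [], 0
        current.append(para)
        count += n
    if current:
        chunks.append("\n\n".join(current))
    return chunks


def reassemble(chunks: list[str]) -> str:
    """Join processed chunks back together with blank lines between them."""
    return "\n\n".join(chunks)
```

Splitting on paragraph boundaries rather than a fixed character count keeps each chunk readable on its own, which seems to matter for how naturally the rewritten pieces fit back together.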

So yeah, Clever AI Humanizer does work for real users, just not in some magical guaranteed way. It’s one of the safer, less scammy humanizers out there, and worth keeping in your toolkit, but it still needs your brain on top. If you treat it like a co-writer instead of a cloaking device, your “mixed results” will get a lot more consistent.

Short answer: what you’re seeing is normal, and honestly it’s about what I’d expect from any AI humanizer right now, including Clever.

Couple of things I haven’t seen spelled out by @mikeappsreviewer or @sognonotturno:

- Detectors don’t agree with each other or with themselves

  Everyone talks about “passing” ZeroGPT / GPTZero like it’s a binary thing, but those models shift over time. Stuff that got 0% AI last month can get a spicy “mostly AI” label a few weeks later after a quiet update. So your “mixed results” might literally be:

  - Same tool

  - Same text pattern

  - Different model version

  That’s why leaning on detector scores as the only success metric is kinda a trap.

- Your intent matters more than the humanizer

  If your workflow is:

  - Dump in a fully generic ChatGPT essay

  - Hit “humanize”

  - Paste it straight into a graded assignment / client deliverable

  You’re going to hit awkward phrasing and occasional flags no matter which tool you use. Clever AI Humanizer can reduce AI fingerprints, but it cannot magically give the text an actual lived experience or point of view. That “hollow center” is what sensitive readers pick up on, even when the detectors say “100% human.”

- Some styles are just inherently sus

  I’ve noticed a pattern:

  - “Simple academic” or highly polished “professional” text is what trips people’s instincts more than detectors.

  - Slightly messy, conversational, or opinionated writing passes human sniff tests way more often, even if the detector gives a small AI% score.

  So if you care what humans think, I’d prioritize tone + specificity over chasing a perfect 0% on every scanner.

- Clever can actually make things too smooth

  This is one place I don’t fully agree with the hype. Clever AI Humanizer is really good at:

  - Cleaning up structure

  - Making transitions logical

  - Removing obvious AI repetition

  But that can backfire. Human writing often has:

  - Slightly abrupt jumps

  - Minor redundancy in weird places

  - Occasional off-topic side comments

  Clever irons a lot of that out. So the text starts to feel like a “normcore essay template.” Detectors might chill, but a teacher who’s read 200 AI-ish essays this semester might still get suspicious even if they can’t prove it.

- Best use case that actually works in practice

  What’s worked for me, consistently, is:

  - Write or generate a rough draft (AI or human, whatever).

  - Run that through Clever AI Humanizer once, not multiple tools.

  - Then do a fast real edit:

    - Add 1 specific detail per paragraph that only you would say (“I tried X last month…”, “The annoying part here is…”)

    - Break 1 or 2 “perfect” sentences into shorter, slightly choppy ones.

    - Remove any sentence that feels like a generic conclusion (the “In conclusion, it is clear that…” type stuff).

  That combo tends to:

  - Keep detectors relatively low

  - Make it read like an actual person who’s a bit imperfect, not a robot that swallowed a style guide

- When Clever AI Humanizer is a bad idea

  I’d not rely on it alone for:

  - Legal, medical, financial stuff where wording precision matters

  - Anything that could get you in serious trouble if someone screams “academic misconduct”

  - Super technical docs where it might “smooth out” important nuance

  It sometimes rewrites in ways that are logically fine but subtly shift emphasis or weaken caveats. For casual content, who cares. For compliance, that’s a problem.

- So does it “really work” for real users?

  Yeah, in this sense:

  - It’s one of the better free tools if your goal is to reduce AI fingerprints without wrecking readability.

  - It’s not consistent across every detector and never will be. No tool is.

  - It won’t save you from putting your own voice in. If you skip that step, you’ll keep getting that “awkward / off” feeling.

If you treat Clever AI Humanizer as a strong first-pass rewriter, and you handle the final polish, it fits nicely into a writing workflow. If you’re looking for a fire-and-forget invisibility cloak, that’s where the mixed results kick in hard.

Short version: your “mixed results” aren’t you doing it wrong, they’re exactly what I’d expect from how AI detectors and humanizers currently behave.

Here’s a more practical angle that fills some gaps left by @sognonotturno, @byteguru and @mikeappsreviewer without replaying their whole testing process.

1. What Clever AI Humanizer is actually good at

If we strip away screenshots and detector worship, Clever AI Humanizer mainly buys you:

Pros

- Detector friction reducer

  Across different tests, it consistently cuts AI scores down, especially on harsh scanners. Not perfect, but clearly better than a lot of other free tools.

- Readable “middle ground” style

  The Simple Academic and Casual modes are actually useful for things like blog posts, reports or basic assignments. It rarely produces total nonsense.

- Good grammar without intentional “dumbing down”

  Unlike some so‑called humanizers that inject errors on purpose, it keeps the text clean enough for professional or semi‑formal use.

- Decent all‑in‑one draft via AI Writer

  If you are lazy or in a rush, its built‑in writer + humanizer combo can give you a usable first draft that just needs a human tweak.

Where I disagree slightly with the others: people are overstating how “natural” it always sounds. It is fine, but not some magic personality machine.

2. Where Clever AI Humanizer will absolutely disappoint you

Cons

- Inconsistent word count

  If your use case has hard limits (journal submission, client contract, school platform), treat its word count setting as a suggestion, not a rule.

- Tone still a bit template‑like

  Even when detectors say “human,” the voice can feel like a generic student or mid‑level content writer. A sharp reader can still feel the AI pattern.

- Meaning shifts in subtle ways

  It rewrites heavily enough that nuance can change: a cautious statement can become more confident, edge cases can get softened, etc. That’s dangerous for legal, medical or technical material.

- Not future‑proof against detector updates

  Something that passes today might get tagged tomorrow when detectors change. That is not Clever’s fault, but it matters if you are thinking “once humanized, always safe.”

So if your bar is “never flagged by any tool, ever,” no humanizer, including this one, can meet that.

3. Why your results feel random

The reason you sometimes see “natural + passes” and other times “flagged + awkward” is mostly three things:

- Source text entropy

  If your original AI draft is super rigid, repetitive and formal, Clever AI Humanizer has a tougher job and pushes harder, which can create that slightly strained tone. A messy, mixed draft (bits of your own writing + AI) usually humanizes much more cleanly.

- Detector behavior drift

  Detectors are not static. A model update can quietly change how “AI‑ish” your structure or vocabulary looks, even if Clever’s output style stayed the same.

- Content type sensitivity

  Short, formulaic content like intros, conclusions, product blurbs and “benefits lists” naturally look AI‑ish. Even human writers fall into patterns there. Humanizers help, but that type of text is where detectors and humans are most suspicious.

4. How to use Clever AI Humanizer without relying on “luck”

Rather than running the same piece through 3 tools 5 times, I’d tighten your workflow:

- Draft however you want

  Mix your own writing with AI if you like. That alone creates more natural variation than pure AI.

- Run through Clever AI Humanizer once

  Pick the style that matches the final context, not just “what beats detectors.” If it is for a class, Simple Academic makes sense. For web copy, Casual or something similar is better.

- Do a fast human “de‑template” pass

  Instead of re‑writing from scratch, focus on three surgical edits:

  - Insert specific details only you would know (dates, experiences, examples, small complaints).

  - Break a couple of perfectly smooth sentences into shorter, slightly choppy ones.

  - Delete at least one generic “wrap up” line like “Overall, this demonstrates how important X is.”

- If you insist on detectors, pick one and stick with it

  Running 4 scanners will only confuse you. Decide which one your real audience actually uses and optimize for “low enough,” not “0 forever.”
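The "delete a generic wrap-up line" edit from the de-template pass above is the one part that is easy to semi-automate. Here is a minimal sketch that just flags candidate sentences for you to review. The phrase list is a hypothetical starter set I made up, so extend it with whatever stock lines you keep catching.

```python
import re

# Hypothetical starter list; add the phrases you find yourself deleting.
GENERIC_OPENERS = ("in conclusion", "overall,", "in summary", "to sum up")


def flag_generic_sentences(text: str) -> list[str]:
    """Return sentences that start with a stock wrap-up phrase."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    return [s for s in sentences if s.lower().startswith(GENERIC_OPENERS)]


sample = (
    "I tried it last week. "
    "Overall, this demonstrates how important X is. "
    "It broke twice."
)
for sentence in flag_generic_sentences(sample):
    print("consider cutting:", sentence)
```

It only flags, it doesn't delete, because whether a line is filler or actually carries your point is still a judgment call.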

That’s where I diverge a bit from the heavier multi‑detector testing you see in @mikeappsreviewer’s breakdown: the obsession with cross‑tool scores tends to waste time with diminishing returns.

5. Competitors in real use, not in theory

Without ranking anyone as “better,” here is how I see the landscape relative to Clever AI Humanizer:

- Tools like the ones often discussed by @sognonotturno tend to focus more on preserving structure and less on deep rewriting. Safer on meaning, weaker on detection scores.

- Stuff that comes up in comparisons from @byteguru usually pushes aggressive paraphrasing, which can be great for detectors but worse for factual precision.

- The workflows and test data highlighted by @mikeappsreviewer show Clever AI Humanizer landing in a nice middle zone: strong detector reduction while staying free and reasonably readable.

If what you care about is SEO articles, medium‑stakes school work, or general web content, Clever AI Humanizer is a solid default choice, as long as you own the final edit.

If you are touching legal contracts, research reports, medical explanations or compliance docs, I would not rely on any humanizer at all beyond light style cleanup, and I’d review line by line.

Bottom line:

Clever AI Humanizer works well enough for real users who treat it as a smart rewriter plus time saver. It fails the moment it is treated like a cloak of invisibility or a substitute for your own voice.