I’m trying to understand what ChatGPTZero does and how reliable it is. I recently got flagged by it when submitting my writing, but I’m not sure why. I need help figuring out if I can trust its results or if there’s a way to improve my chances of passing it. Any advice or personal experiences would be helpful.

ChatGPTZero (you might also see it called GPTZero) is one of those AI detection tools that claim they can tell whether a text was written by an AI (like ChatGPT) or a real human. It runs your writing through some statistical checks, mainly “perplexity” (how unpredictable your text is to a language model) and “burstiness” (how much your sentence length and structure vary). The idea is that AI tends to write in a predictable, fairly uniform pattern, while human authors mix things up more.
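GPTZero doesn’t publish its exact formulas, so take this as a toy sketch only. Assuming “burstiness” is measured as the coefficient of variation of sentence length (standard deviation divided by mean), a few lines of Python show the idea. The function names and the naive sentence splitter are made up for illustration; this is not the tool’s actual method.

```python
import re
import statistics

# Toy illustration (assumption): "burstiness" as the coefficient of variation
# of sentence length. Real detectors may define and weight it differently.

def sentence_lengths(text: str) -> list[int]:
    """Split on runs of . ! ? and count the words in each non-empty sentence."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]

def burstiness(text: str) -> float:
    """Population std dev of sentence length divided by the mean length.

    0.0 means every sentence is the same length; higher means more variation.
    Texts with fewer than two sentences return 0.0.
    """
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths) / statistics.mean(lengths)
```

On uniform text like “The cat sat on the mat. The dog lay on the rug.” this returns 0.0, while text that mixes one-word sentences with long ones scores much higher, which is roughly the “humans mix stuff up” intuition.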

But here’s the catch: AI detectors aren’t 100% accurate. Heck, sometimes they’re not even close. They get things wrong pretty often, especially if your writing is polished or you just happen to write in a structured way that resembles AI output (which isn’t your fault). Tons of people have been flagged unfairly, and the idea of universities, teachers, or bosses treating these tools as “proof” of AI use is kinda laughable right now.

If you got flagged, it doesn’t necessarily mean you did anything wrong! The models can totally misfire, especially on academic or well-edited prose. And, no, there isn’t a great manual way to “fix” your writing so you magically don’t get flagged, unless you want to intentionally write messier (which isn’t always a good look).

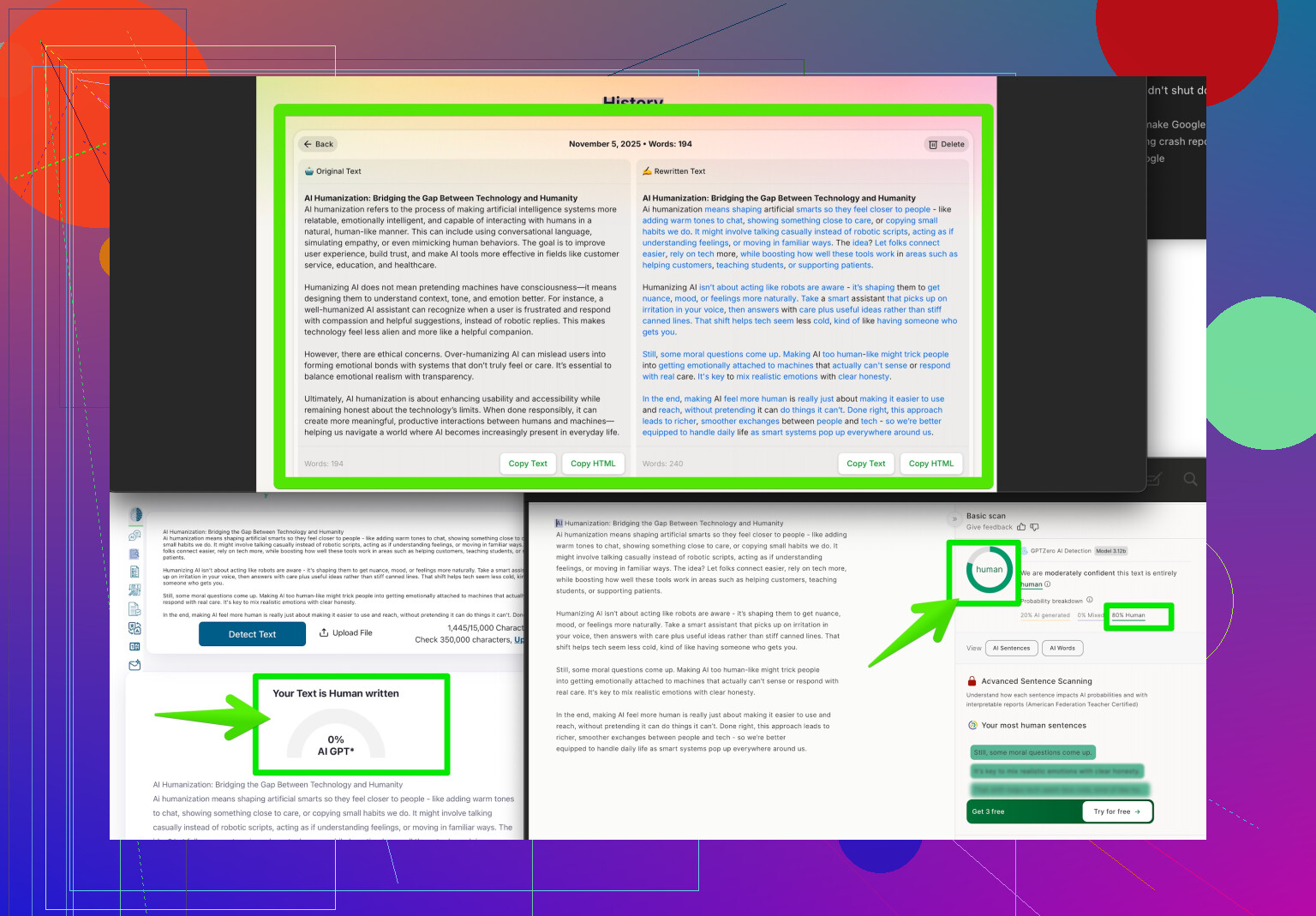

If you gotta pass those AI checks for whatever reason (and don’t want to risk getting flagged again), look into something like Clever AI Humanizer, which is supposed to make your writing read as more “human” to detectors. You can check it out here: make your writing slip past AI detectors.

So, bottom line: ChatGPTZero is cool tech, but don’t trust it with your academic integrity (or your job). Take the results with a big ol’ grain of salt. If you’re being unfairly accused of using AI, push back; a lot of folks are getting falsely flagged.

ChatGPTZero (or GPTZero, same deal) flags a ton of people, so you’re really not alone in getting hit with a “this is AI” warning. As for how it works, it crunches your words through stats like perplexity and burstiness: basically, how unpredictable your word choices are and how much your sentences vary. The theory is that humans write all over the place, while AI is a bit more “robotic” and formulaic. But here’s the rub: a lot of professional, careful, or academic writing ends up looking “robotic” too. Welcome to the club lol.
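Perplexity makes more sense with an actual number attached. Real detectors score text with a large language model, and GPTZero’s exact setup isn’t public, so in this rough sketch a tiny unigram word-count model with add-one smoothing stands in as an assumption. Perplexity is exp(-(1/N) * sum of log p(w_i)) over the N words: the lower it is, the more predictable the model finds the text, which is what detectors treat as “AI-like.” The function names and sample corpus are hypothetical.

```python
import math
from collections import Counter

# Toy stand-in (assumption): real detectors use a large neural language model,
# not word counts. This only illustrates what "perplexity" measures.

def train_unigram(corpus: str) -> tuple[Counter, int]:
    """Count lowercase word frequencies in a reference corpus."""
    words = corpus.lower().split()
    return Counter(words), len(words)

def perplexity(text: str, counts: Counter, total: int) -> float:
    """Perplexity of `text` under an add-one (Laplace) smoothed unigram model.

    Lower values mean the model finds the text more predictable.
    """
    words = text.lower().split()
    if not words:
        raise ValueError("text must contain at least one word")
    # Known vocabulary plus one extra slot shared by all unseen words,
    # so the smoothed probabilities still sum to 1.
    vocab_size = len(counts) + 1
    log_prob = 0.0
    for w in words:
        p = (counts[w] + 1) / (total + vocab_size)
        log_prob += math.log(p)
    # exp of the average negative log-probability per word
    return math.exp(-log_prob / len(words))
```

Trained on “the cat sat on the mat the dog sat on the rug”, the phrase “the cat sat on the mat” scores about 6.4, while a string of words the model has never seen scores 20. Swap the toy model for a big LLM and you get the same logic: clean, conventional phrasing is predictable, so it scores low.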

Is it accurate? Meh. Accuracy is all over the map. Sometimes it nails AI-written stuff. Sometimes it’ll ding Shakespeare or flag your grandma’s recipe card. And if you write super clean or just happen to use “perfect” grammar, these detectors can easily call it AI. Relying on these as gospel is just… not it. Like @boswandelaar said, people get unfairly dinged all the time.

If you want an alternative to “just write messier,” maybe try something like Clever AI Humanizer. It’s built specifically to help your text get past those detectors without butchering your style. Real talk: nothing is perfect, and if someone’s threatening action just because of an AI detector result, you’ve got every right to push back. These detectors are more of an interesting experiment than a reliable judge.

By the way, I found a solid Reddit thread with actual user feedback and discussion about making writing seem more human: Humanize AI: Tips & Tricks from Actual Writers. It might give you some specific ideas and some reassurance.

Short version: detectors like ChatGPTZero are fun toys, but they’re far from trustworthy truth machines. Don’t panic, and if you need to dodge false flags, give Clever AI Humanizer a go.

Let’s get real: the science behind GPT detectors like ChatGPTZero is definitely interesting, but if you’re expecting something infallible, that’s not happening. The main issue is the overlap between “AI-sounding” text and what we consider good, proper, academic, or even just tidy writing. Polished humans = robotic? According to these detectors, sometimes yes.

A lot of academic and business writing gets flagged as “AI” just because it’s well-organized and follows conventions. Weird, right? That’s what you get when detectors lean on metrics like “perplexity” and “burstiness”, which don’t really take context or intent into account. @kakeru and @boswandelaar already laid out how these tools work, but I want to add that some detectors overfit to those patterns, and real humans can and do write in repetitive, structured, or “boring” ways, especially in certain disciplines.

I kind of question the value of trying to “game” these detectors by purposely jumbling your language. Why water down your arguments or clarity? Instead, a tool like Clever AI Humanizer is a decent middle ground, especially if you’re being forced to clear detector checks you never signed up for! Pros: it’s quick, takes the guesswork out, and preserves most of your intent and voice. Cons: its rephrasing sometimes gets a bit heavy-handed, or leaves your style feeling slightly off if you’re picky. Also, as with any algorithmic tool, a pass is never 100% guaranteed, and an over-humanized essay could trip up actual human graders. Building on @kakeru and @boswandelaar’s suggestions, Clever AI Humanizer gives you a more practical approach than “just accept the system” or “rewrite everything by hand.”

Bottom line: don’t trust ChatGPTZero or any similar tool to be the final word, and treat all results as advisory. If you hit a wall, try Clever AI Humanizer, but read over the output before submitting, because you still want your original message to shine through. If you’re getting flagged, push back! These are imperfect tools at best, not academic judges.